Heat

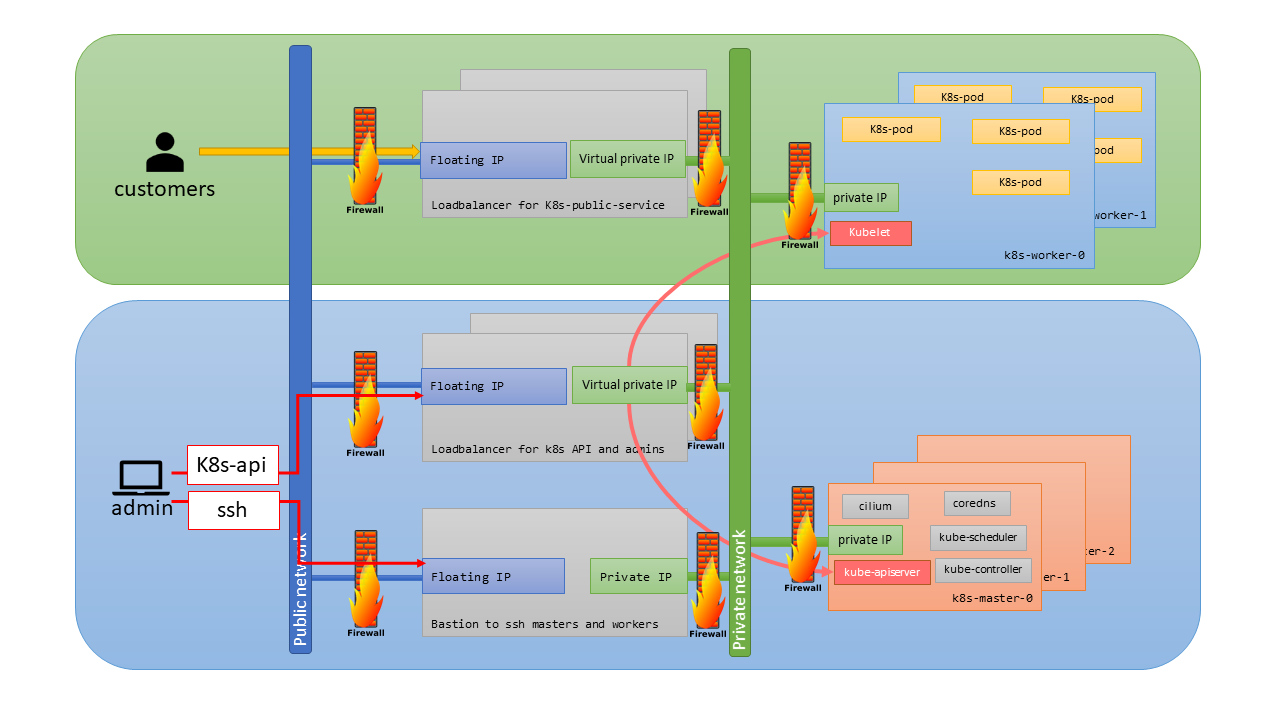

Create, in a few minutes, the complete network architecture required to deploy a Kubernetes cluster.

All deployment files: Infomaniak resources

Install dependencies

To begin, set up a Python virtual environment and install all the required dependencies in it.

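```bash
python3 -m venv venv
source venv/bin/activate
pip3 install -r requirements.txt
# load your OpenStack credentials (use the path to your own openrc file)
source /Users/myuser/PCP-XXXX-openrc.sh
```

The requirements.txt file lists the OpenStack command-line clients:

```
openstackclient
python-heatclient
python-swiftclient
python-glanceclient
python-novaclient
gnocchiclient
python-cloudkittyclient
```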
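Before going further, you can check that sourcing the openrc file actually exported your credentials. The sketch below assumes the usual Keystone variables (OS_AUTH_URL, OS_USERNAME, OS_PASSWORD, OS_PROJECT_NAME). The exact set written by your own openrc file may differ, so adjust the list if needed.

```python
import os
from typing import Iterable, List, Mapping

# Assumption: typical variables exported by an OpenStack openrc file.
# Adjust this tuple to match the file you downloaded.
REQUIRED_OPENRC_VARS = ("OS_AUTH_URL", "OS_USERNAME", "OS_PASSWORD", "OS_PROJECT_NAME")


def missing_openrc_vars(env: Mapping[str, str],
                        required: Iterable[str] = REQUIRED_OPENRC_VARS) -> List[str]:
    """Return the required variables that are unset or empty in env."""
    return [name for name in required if not env.get(name)]


if __name__ == "__main__":
    missing = missing_openrc_vars(os.environ)
    if missing:
        print("Missing OpenStack credentials:", ", ".join(missing))
    else:
        print("OpenStack credentials loaded")
```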

Create the OpenStack infrastructure

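Run this command from the heat_k8s directory once the four templates below (bastion.yaml, master.yaml, worker.yaml and cluster.yaml) are saved in it, replacing stack-name with the name you want to give the stack:

```bash
openstack stack create -t cluster.yaml --parameter whitelisted_ip_range="10.8.0.0/16" stack-name --wait
```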
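The whitelisted_ip_range value must be a valid IPv4 CIDR, otherwise the security group and listener rules that use it are rejected. A minimal sketch, assuming you drive the CLI from a Python script, that validates the range with the standard ipaddress module before building the same command as above:

```python
import ipaddress
from typing import List


def stack_create_command(template: str, stack_name: str, whitelisted_ip_range: str) -> List[str]:
    """Build the `openstack stack create` command, rejecting an invalid CIDR early."""
    # ip_network raises ValueError for malformed ranges such as 10.8.0.0/42
    # (an IPv4 prefix cannot exceed /32) or ranges with host bits set (10.8.1.0/16).
    network = ipaddress.ip_network(whitelisted_ip_range, strict=True)
    return [
        "openstack", "stack", "create",
        "-t", template,
        "--parameter", f"whitelisted_ip_range={network}",
        stack_name,
        "--wait",
    ]


if __name__ == "__main__":
    print(" ".join(stack_create_command("cluster.yaml", "stack-name", "10.8.0.0/16")))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` once the openrc file is sourced.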

Create a directory named heat_k8s, move into it and save the following templates there:

```bash
mkdir heat_k8s
cd heat_k8s
```
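cluster.yaml refers to bastion.yaml, master.yaml and worker.yaml through `type:` entries with relative paths, so all four files need to sit in the same directory when you run `openstack stack create`. A minimal sketch that lists the nested templates a file references and reports those missing from the directory; the regular expression is a simplification that only recognizes `type: something.yaml` lines.

```python
import re
from pathlib import Path
from typing import List

# Simplified pattern: matches lines such as "type: master.yaml" (nested templates)
# but not resource types such as "type: OS::Nova::Server".
NESTED_TYPE = re.compile(r"^[ \t]*type:[ \t]*([\w./-]+\.ya?ml)[ \t]*$", re.MULTILINE)


def referenced_templates(template_text: str) -> List[str]:
    """Return the sorted, de-duplicated nested template paths found in a template."""
    return sorted(set(NESTED_TYPE.findall(template_text)))


def missing_nested_templates(directory: Path, main_template: str = "cluster.yaml") -> List[str]:
    """Return nested templates referenced by main_template that are absent from directory."""
    text = (directory / main_template).read_text()
    return [name for name in referenced_templates(text) if not (directory / name).is_file()]


if __name__ == "__main__":
    target = Path("heat_k8s")
    if (target / "cluster.yaml").is_file():
        print("missing:", missing_nested_templates(target))
```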

bastion.yaml:

```yaml
heat_template_version: 2016-10-14

parameters:
  image:
    type: string
    description: Image used for VMs
    default: Ubuntu 20.04 LTS Focal Fossa
  ssh_key:
    type: string
    description: SSH key to connect to VMs
    default: yubikey
  flavor:
    type: string
    description: flavor used by the bastion
    default: a1-ram2-disk20-perf1
  whitelisted_ip_range:
    type: string
    description: ip range allowed to connect to the bastion and the k8s api server
  network:
    type: string
    description: Network used by the VM
  subnet:
    type: string
    description: Subnet used by the VM

resources:
  bastion_floating_ip:
    type: OS::Neutron::FloatingIP
    properties:
      floating_network: ext-floating1

  bastion_port:
    type: OS::Neutron::Port
    properties:
      name: bastion
      network: {get_param: network}
      fixed_ips:
        - subnet_id: {get_param: subnet}
      security_groups:
        - {get_resource: securitygroup_bastion}

  bastion_instance:
    type: OS::Nova::Server
    properties:
      name: bastion
      key_name: { get_param: ssh_key }
      image: { get_param: image }
      flavor: { get_param: flavor }
      networks: [{port: {get_resource: bastion_port} }]

  association:
    type: OS::Neutron::FloatingIPAssociation
    properties:
      floatingip_id: { get_resource: bastion_floating_ip }
      port_id: { get_resource: bastion_port }

  securitygroup_bastion:
    type: OS::Neutron::SecurityGroup
    properties:
      description: Bastion SSH
      name: bastion
      rules:
        - protocol: tcp
          remote_ip_prefix: {get_param: whitelisted_ip_range}
          port_range_min: 22
          port_range_max: 22

outputs:
  bastion_public_ip:
    description: Floating IP of the bastion host
    value: {get_attr: [bastion_floating_ip, floating_ip_address]}
```
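The single rule of securitygroup_bastion opens TCP port 22 to whitelisted_ip_range only. The sketch below is a simplified model of how such ingress rules are evaluated: a packet is accepted if any rule matches its protocol, port and source address. It uses the same keys as the Heat template and assumes that a missing direction means ingress (Heat's default) and that missing port bounds mean every port; ethertype, egress rules and remote security groups are ignored.

```python
import ipaddress
from typing import Any, Dict, List, Optional


def rule_matches(rule: Dict[str, Any], protocol: str, port: Optional[int], src_ip: str) -> bool:
    """Simplified check of one Heat-style security group rule against an incoming packet."""
    # Heat defaults the direction of a rule to ingress.
    if rule.get("direction", "ingress") != "ingress":
        return False
    # A missing protocol means any protocol.
    if rule.get("protocol") not in (None, protocol):
        return False
    # A missing remote_ip_prefix means any source address.
    prefix = rule.get("remote_ip_prefix")
    if prefix and ipaddress.ip_address(src_ip) not in ipaddress.ip_network(prefix):
        return False
    # Missing port bounds mean every port of the protocol.
    low, high = rule.get("port_range_min"), rule.get("port_range_max")
    if port is not None and low is not None and high is not None:
        return low <= port <= high
    return True


def is_allowed(rules: List[Dict[str, Any]], protocol: str, port: Optional[int], src_ip: str) -> bool:
    """Security groups are allow-lists: a packet passes if at least one rule matches."""
    return any(rule_matches(rule, protocol, port, src_ip) for rule in rules)


# securitygroup_bastion, with whitelisted_ip_range resolved to 10.8.0.0/16
bastion_rules = [
    {"protocol": "tcp", "remote_ip_prefix": "10.8.0.0/16",
     "port_range_min": 22, "port_range_max": 22},
]

print(is_allowed(bastion_rules, "tcp", 22, "10.8.3.4"))    # True: SSH from the whitelist
print(is_allowed(bastion_rules, "tcp", 22, "192.0.2.10"))  # False: SSH from elsewhere
```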

master.yaml:

```yaml
---
heat_template_version: 2016-10-14

description: A Kubernetes master node, registered as a member of the API load-balancer pool

parameters:
  name:
    type: string
    description: Server name
  image:
    type: string
    description: Image used for servers
  ssh_key:
    type: string
    description: SSH key to connect to VMs
  flavor:
    type: string
    description: flavor used by the servers
  pool_id:
    type: string
    description: Pool to contact
  metadata:
    type: json
  network:
    type: string
    description: Network used by the server
  subnet:
    type: string
    description: Subnet used by the server
  security_groups:
    type: string
    description: Security groups used by the server
  allowed_address_pairs:
    type: json
    default: []
  servergroup:
    type: string

resources:
  port:
    type: OS::Neutron::Port
    properties:
      network: {get_param: network}
      security_groups: [{get_param: security_groups}]
      allowed_address_pairs: {get_param: allowed_address_pairs}

  server:
    type: OS::Nova::Server
    properties:
      name: {get_param: name}
      flavor: {get_param: flavor}
      image: {get_param: image}
      key_name: {get_param: ssh_key}
      metadata: {get_param: metadata}
      networks: [{port: {get_resource: port} }]
      scheduler_hints:
        group: {get_param: servergroup}

  member:
    type: OS::Octavia::PoolMember
    properties:
      pool: {get_param: pool_id}
      address: {get_attr: [server, first_address]}
      protocol_port: 6443
      subnet: {get_param: subnet}

outputs:
  server_ip:
    description: IP Address of master nodes.
    value: { get_attr: [server, first_address] }
  lb_member:
    description: LB member details.
    value: { get_attr: [member, show] }
```
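The allowed_address_pairs parameter matters because Neutron port security drops packets whose source address is neither the port's fixed IP nor one of its allowed address pairs. Pods and Kubernetes services use addresses from kube_pods_subnet and kube_service_addresses, which is why cluster.yaml adds both ranges to every node port. A simplified model of that source check, looking at the IP part only (the real check also involves the MAC address); the node address 10.11.12.15 is a made-up example.

```python
import ipaddress
from typing import Dict, List


def source_permitted(src_ip: str, fixed_ip: str, allowed_address_pairs: List[Dict[str, str]]) -> bool:
    """Simplified Neutron port-security check on the source IP of outgoing traffic."""
    addr = ipaddress.ip_address(src_ip)
    if addr == ipaddress.ip_address(fixed_ip):
        return True
    # Each pair's ip_address may be a single address or a CIDR, as in cluster.yaml.
    return any(addr in ipaddress.ip_network(pair["ip_address"]) for pair in allowed_address_pairs)


# Ranges passed by cluster.yaml (template defaults); the node IP is hypothetical.
k8s_pairs = [{"ip_address": "10.233.0.0/18"}, {"ip_address": "10.233.64.0/18"}]
node_ip = "10.11.12.15"
```

With these values, traffic sourced from a pod address such as 10.233.70.5 is accepted, while an unrelated address on the node subnet is dropped.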

worker.yaml:

```yaml
---
heat_template_version: 2016-10-14

description: A Kubernetes worker node

parameters:
  name:
    type: string
    description: Server name
  image:
    type: string
    description: Image used for servers
  ssh_key:
    type: string
    description: SSH key to connect to VMs
  flavor:
    type: string
    description: flavor used by the servers
  metadata:
    type: json
  network:
    type: string
    description: Network used by the server
  security_groups:
    type: string
    description: Security groups used by the server
  allowed_address_pairs:
    type: json
    default: []
  servergroup:
    type: string

resources:
  port:
    type: OS::Neutron::Port
    properties:
      network: {get_param: network}
      security_groups: [{get_param: security_groups}]
      allowed_address_pairs: {get_param: allowed_address_pairs}

  server:
    type: OS::Nova::Server
    properties:
      name: {get_param: name}
      flavor: {get_param: flavor}
      image: {get_param: image}
      key_name: {get_param: ssh_key}
      metadata: {get_param: metadata}
      networks: [{port: {get_resource: port} }]
      scheduler_hints:
        group: {get_param: servergroup}

outputs:
  server_ip:
    description: IP Address of the worker nodes.
    value: { get_attr: [server, first_address] }
```
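Both node templates pass scheduler_hints.group, so each server joins a server group that cluster.yaml creates with the anti-affinity policy: no two members of the same group may run on the same compute host, and Nova refuses to boot a member when no suitable host is left. Masters and workers are in different groups, so a master and a worker may still share a host. The toy placement below only illustrates that constraint; it is not Nova's scheduler, and the host names are hypothetical.

```python
from typing import Dict, List


def place_anti_affinity(servers: List[str], hosts: List[str]) -> Dict[str, str]:
    """Toy placement honouring a hard anti-affinity policy: one group member per host."""
    if len(servers) > len(hosts):
        # Nova fails the boot with a "No valid host" error in this situation.
        raise RuntimeError(f"{len(servers)} servers but only {len(hosts)} distinct hosts")
    return dict(zip(servers, hosts))


# OS::Heat::ResourceGroup replaces %index% with 0, 1, 2, ... in each member's name.
masters = [f"k8s-master-{i}" for i in range(3)]
workers = [f"k8s-worker-{i}" for i in range(2)]
hosts = ["compute-a", "compute-b", "compute-c"]  # hypothetical compute hosts
```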

cluster.yaml:

```yaml
heat_template_version: 2016-10-14

description: Kubernetes cluster

parameters:
  image:
    type: string
    description: Image used for VMs
    default: Ubuntu 20.04 LTS Focal Fossa
  ssh_key:
    type: string
    description: SSH key to connect to VMs
    default: your-key
  master_flavor:
    type: string
    description: flavor used by master nodes
    default: a2-ram4-disk80-perf1
  worker_flavor:
    type: string
    description: flavor used by worker nodes
    default: a4-ram8-disk80-perf1
  bastion_flavor:
    type: string
    description: flavor used by the bastion
    default: a1-ram2-disk20-perf1
  whitelisted_ip_range:
    type: string
    description: ip range allowed to connect to the bastion and the k8s api server
    default: 10.8.0.0/16
  subnet_cidr:
    type: string
    description: cidr for the private network
    default: 10.11.12.0/24
  kube_service_addresses:
    type: string
    description: Kubernetes internal network for services.
    default: 10.233.0.0/18
  kube_pods_subnet:
    type: string
    description: Kubernetes internal network for pods.
    default: 10.233.64.0/18

resources:
  # Private network
  k8s_net:
    type: OS::Neutron::Net
    properties:
      name: k8s-network
      value_specs:
        mtu: 1500

  k8s_subnet:
    type: OS::Neutron::Subnet
    properties:
      name: k8s-subnet
      network_id: {get_resource: k8s_net}
      cidr: {get_param: subnet_cidr}
      dns_nameservers:
        - 84.16.67.69
        - 84.16.67.70
      ip_version: 4

  # Router between the private network and the external network (used by the floating IPs)
  k8s_router:
    type: OS::Neutron::Router
    properties:
      name: k8s-router
      external_gateway_info: { network: ext-floating1 }

  k8s_router_subnet_interface:
    type: OS::Neutron::RouterInterface
    properties:
      router_id: {get_resource: k8s_router}
      subnet: {get_resource: k8s_subnet}

  # Master nodes
  group_masters:
    type: OS::Nova::ServerGroup
    properties:
      name: k8s-masters
      policies: ["anti-affinity"]

  k8s_masters:
    type: OS::Heat::ResourceGroup
    properties:
      count: 3
      resource_def:
        type: master.yaml
        properties:
          name: k8s-master-%index%
          servergroup: {get_resource: group_masters}
          flavor: {get_param: master_flavor}
          image: {get_param: image}
          ssh_key: {get_param: ssh_key}
          network: {get_resource: k8s_net}
          subnet: {get_resource: k8s_subnet}
          pool_id: {get_resource: pool_masters}
          metadata: {"metering.server_group": {get_param: "OS::stack_id"}}
          security_groups: {get_resource: securitygroup_masters}
          allowed_address_pairs:
            - ip_address: {get_param: kube_service_addresses}
            - ip_address: {get_param: kube_pods_subnet}

  securitygroup_masters:
    type: OS::Neutron::SecurityGroup
    properties:
      description: K8s masters
      name: k8s-masters
      rules:
        - protocol: icmp
          remote_ip_prefix: {get_param: subnet_cidr}
        - protocol: tcp
          direction: ingress
          remote_ip_prefix: {get_param: subnet_cidr}
        - protocol: udp
          direction: ingress
          remote_ip_prefix: {get_param: subnet_cidr}

  # Worker nodes
  group_workers:
    type: OS::Nova::ServerGroup
    properties:
      name: k8s-workers
      policies: ["anti-affinity"]

  k8s_workers:
    type: OS::Heat::ResourceGroup
    properties:
      count: 2
      resource_def:
        type: worker.yaml
        properties:
          name: k8s-worker-%index%
          servergroup: {get_resource: group_workers}
          flavor: {get_param: worker_flavor}
          image: {get_param: image}
          ssh_key: {get_param: ssh_key}
          network: {get_resource: k8s_net}
          metadata: {"metering.server_group": {get_param: "OS::stack_id"}}
          security_groups: {get_resource: securitygroup_workers}
          allowed_address_pairs:
            - ip_address: {get_param: kube_service_addresses}
            - ip_address: {get_param: kube_pods_subnet}

  securitygroup_workers:
    type: OS::Neutron::SecurityGroup
    properties:
      description: K8s workers
      name: k8s-workers
      rules:
        - protocol: icmp
          remote_ip_prefix: {get_param: subnet_cidr}
        - protocol: tcp
          direction: ingress
          remote_ip_prefix: {get_param: subnet_cidr}
        - protocol: udp
          direction: ingress
          remote_ip_prefix: {get_param: subnet_cidr}

  # Kubernetes API load balancer
  loadbalancer_k8s_api:
    type: OS::Octavia::LoadBalancer
    properties:
      name: k8s-api
      vip_subnet: {get_resource: k8s_subnet}

  listener_masters:
    type: OS::Octavia::Listener
    properties:
      name: k8s-master
      loadbalancer: {get_resource: loadbalancer_k8s_api}
      protocol: HTTPS
      protocol_port: 6443
      allowed_cidrs:
        - {get_param: whitelisted_ip_range}
        - {get_param: subnet_cidr}
        - {get_param: kube_service_addresses}
        - {get_param: kube_pods_subnet}

  pool_masters:
    type: OS::Octavia::Pool
    properties:
      name: k8s-master
      listener: {get_resource: listener_masters}
      lb_algorithm: ROUND_ROBIN
      protocol: HTTPS
      session_persistence:
        type: SOURCE_IP

  monitor_masters:
    type: OS::Octavia::HealthMonitor
    properties:
      pool: { get_resource: pool_masters }
      type: HTTPS
      url_path: /livez?verbose
      http_method: GET
      expected_codes: "200"
      delay: 5
      max_retries: 5
      timeout: 5

  loadbalancer_floating:
    type: OS::Neutron::FloatingIP
    properties:
      floating_network_id: ext-floating1
      port_id: {get_attr: [loadbalancer_k8s_api, vip_port_id]}

  # Bastion
  bastion:
    type: bastion.yaml
    depends_on: [ k8s_net, k8s_router, k8s_router_subnet_interface ]
    properties:
      image: {get_param: image}
      ssh_key: { get_param: ssh_key }
      flavor: { get_param: bastion_flavor }
      whitelisted_ip_range: {get_param: whitelisted_ip_range}
      network: {get_resource: k8s_net}
      subnet: {get_resource: k8s_subnet}

outputs:
  bastion_public_ip:
    description: Floating IP of the bastion host
    value: {get_attr: [bastion, resource.bastion_floating_ip, floating_ip_address]}
  k8s_masters:
    description: IP addresses of the master nodes
    value: {get_attr: [k8s_masters, server_ip]}
  k8s_workers:
    description: IP addresses of the worker nodes
    value: {get_attr: [k8s_workers, server_ip]}
  vrrp_public_ip:
    description: Floating IP of the Kubernetes API load balancer
    value: {get_attr: [loadbalancer_floating, floating_ip_address]}
```
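Kubernetes requires the pod and service ranges not to overlap each other or the node subnet; the check below also includes whitelisted_ip_range for good measure. A short sketch using Python's standard ipaddress module and the template defaults above:

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple

# Default values of the cluster.yaml parameters.
defaults = {
    "subnet_cidr": "10.11.12.0/24",
    "kube_service_addresses": "10.233.0.0/18",
    "kube_pods_subnet": "10.233.64.0/18",
    "whitelisted_ip_range": "10.8.0.0/16",
}


def overlapping_ranges(params: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of parameter names whose CIDR ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in params.items()}
    return [(a, b) for a, b in combinations(networks, 2) if networks[a].overlaps(networks[b])]


print(overlapping_ranges(defaults))  # [] with the defaults
```

Running it again after editing a parameter (for instance with `dict(defaults, kube_pods_subnet="10.233.32.0/19")`) flags the conflicting pair before the stack is created.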

These templates describe, from an OpenStack point of view, the components of your future cluster: IP addressing, traffic rules and SSH key management. All of them are referenced by the cluster.yaml manifest, which organizes and deploys every resource defined above to build an infrastructure ready to host a Kubernetes installation.

To install your SSH key on the machines created by these templates, get the name of your key with:

```bash
openstack keypair list
```

then set it as the default value of the ssh_key parameter in cluster.yaml:

```yaml
parameters:
  ssh_key:
    type: string
    description: SSH key to connect to VMs
    default: your-key
```
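If you prefer to check the key name from a script, the sketch below reads the JSON output of `openstack keypair list -f json`. It assumes each entry exposes a "Name" field, matching the column shown by the default table output; verify this against your client version.

```python
import json
import subprocess
from typing import List


def keypair_names(keypair_list_json: str) -> List[str]:
    """Extract key names from `openstack keypair list -f json` output (assumes a "Name" field)."""
    return [entry["Name"] for entry in json.loads(keypair_list_json)]


def fetch_keypair_names() -> List[str]:
    """Run the CLI (requires a sourced openrc file) and return the key names."""
    result = subprocess.run(["openstack", "keypair", "list", "-f", "json"],
                            capture_output=True, text=True, check=True)
    return keypair_names(result.stdout)
```

`"your-key" in fetch_keypair_names()` then tells you whether the default in cluster.yaml matches an existing key.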

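To sum the ratings computed by CloudKitty (queried through python-cloudkittyclient) for the data frames since 25 August 2021:

```bash
openstack rating dataframes get -b 2021-08-25T00:00:00 | awk -F 'rating' '{print $2}' | awk -F "'" '{sum+=$3} END{print sum/50}'
```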
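The awk pipeline is terse, so here is the same computation spelled out in Python. The output format is an assumption inferred from the awk program itself: each relevant line is expected to contain a fragment such as `'rating': '0.0123'`. The division by 50 is reproduced as-is; the source does not say what it represents.

```python
import re
from typing import Iterable

# awk -F 'rating' '{print $2}' keeps the text after the first "rating" on each line;
# awk -F "'" '{sum+=$3}' then adds the third quote-separated field, i.e. the value
# in a fragment such as  'rating': '0.0123'
RATING_VALUE = re.compile(r"rating'\s*:\s*'([^']*)'")


def summed_rating(lines: Iterable[str], divisor: float = 50) -> float:
    """Sum the first rating value found on each line, then divide like the awk command."""
    total = 0.0
    for line in lines:
        match = RATING_VALUE.search(line)
        if match:
            total += float(match.group(1))
    return total / divisor
```

A script calling `summed_rating(sys.stdin)` can be placed at the end of the `openstack rating dataframes get` pipe instead of the two awk commands.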

After modifying a template or a parameter, update the existing stack:

```bash
openstack stack update -t cluster.yaml --parameter whitelisted_ip_range="10.8.0.0/16" stack-name --wait
```

To delete the stack and all of its resources:

```bash
openstack stack delete stack-name --wait
```

To list the security group rules applied to your master and worker nodes:

```bash
openstack security group rule list k8s-masters
openstack security group rule list k8s-workers
```