Manage multiple clusters

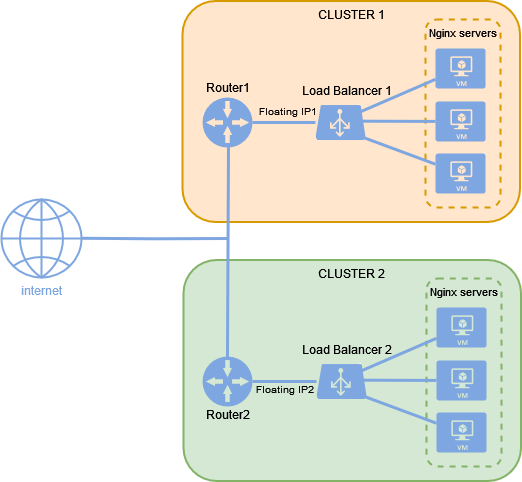

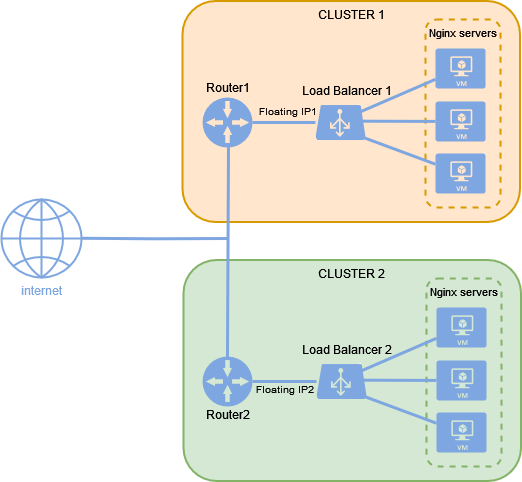

In this section, we use Terraform to deploy two separate clusters in the same region.

Each cluster consists of a load balancer that distributes traffic in a round-robin manner across 3 VMs, each running a simple nginx server listening on port 80.

Requirements

Follow the Getting started guide to set up your Terraform environment.

First, configure the OpenStack provider to use the Infomaniak Public Cloud:

**providers.tf**

```hcl
terraform {
  required_version = ">= 0.14.0"

  required_providers {
    openstack = {
      source  = "terraform-provider-openstack/openstack"
      version = "~> 2.0.0"
    }
  }
}

# Configure the OpenStack Provider
provider "openstack" {
  cloud       = "PCP-XXXXXXX"
  use_octavia = true # (1) see the note below
}
```

(1) We explicitly enable support for Octavia, the OpenStack load-balancing service, so that the provider can manage load balancers. This is only needed with older versions of the Terraform provider for OpenStack.
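If you work with several Public Cloud projects, you could avoid hard-coding the `clouds.yaml` entry name in the provider. The sketch below is an assumption on our side, not part of the original setup: it introduces a hypothetical `cloud_name` variable and would replace the `provider` block above.

```hcl
# Hypothetical variable holding the name of your clouds.yaml entry
# (the "PCP-XXXXXXX" project used above)
variable "cloud_name" {
  type        = string
  description = "Name of the clouds.yaml entry for your Public Cloud project"
}

# Same provider configuration as above, with the cloud name taken from the variable
provider "openstack" {
  cloud       = var.cloud_name
  use_octavia = true
}
```

You would then pass the value at run time, for example with `-var="cloud_name=PCP-XXXXXXX"` or from a `.tfvars` file.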

Then, add some variables to describe the infrastructure:

**variables.tf**

```hcl
# Public network name
variable "public-net" {
  default = "ext-floating1"
}

# Flavor for cluster1 instances
variable "cluster1_flavor_name" {
  default = "a2-ram4-disk20-perf1"
}

# Flavor for cluster2 instances
variable "cluster2_flavor_name" {
  default = "a2-ram4-disk20-perf1"
}

# Image for instances
variable "image_name" {
  default = "Debian 12 bookworm"
}

# Number of instances in each cluster
variable "number_of_instances" {
  type    = number
  default = 3
}

# Your SSH public key
variable "ssh-key" {
  default = "ssh-rsa AAAA..." # (1) see the note below
}

# Create the resource for the SSH key
resource "openstack_compute_keypair_v2" "ssh-key" {
  name       = "ssh-key"
  public_key = var.ssh-key
}
```

(1) Paste the entire public key on a single line.
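Rather than pasting the key into the variable, you could let Terraform read it from disk. This is a minimal sketch that assumes your public key is stored at `~/.ssh/id_ed25519.pub`, so adjust the path to your setup. It would replace the `openstack_compute_keypair_v2` resource above, and the `ssh-key` variable would then no longer be needed.

```hcl
# Create the key pair from a local public key file.
# Assumption: the key lives at ~/.ssh/id_ed25519.pub; change the path if needed.
resource "openstack_compute_keypair_v2" "ssh-key" {
  name = "ssh-key"
  # pathexpand() turns "~" into your home directory,
  # trimspace() drops the trailing newline written by ssh-keygen
  public_key = trimspace(file(pathexpand("~/.ssh/id_ed25519.pub")))
}
```

Reading the file also keeps the key on a single line, which avoids the copy and paste issue described in the note.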

Now, let's configure the networks for our clusters:

**networks.tf**

```hcl
########################
# cluster1 network
########################

# Look up the external (public) network
data "openstack_networking_network_v2" "cluster1" {
  name = var.public-net
}

# Create cluster1 network
resource "openstack_networking_network_v2" "cluster1" {
  name           = "cluster1"
  admin_state_up = true
}

# Create its subnet
resource "openstack_networking_subnet_v2" "cluster1" {
  name            = "cluster1"
  network_id      = openstack_networking_network_v2.cluster1.id
  cidr            = "10.0.0.0/24"
  ip_version      = 4
  dns_nameservers = ["83.166.143.51", "83.166.143.52"]
}

# Create the router, with its gateway on the external network
resource "openstack_networking_router_v2" "cluster1" {
  name                = "cluster1"
  admin_state_up      = true
  external_network_id = data.openstack_networking_network_v2.cluster1.id
}

# Plug the subnet into the router
resource "openstack_networking_router_interface_v2" "cluster1" {
  router_id = openstack_networking_router_v2.cluster1.id
  subnet_id = openstack_networking_subnet_v2.cluster1.id
}

#########################
# cluster2 network
#########################

# Look up the external (public) network
data "openstack_networking_network_v2" "cluster2" {
  name = var.public-net
}

# Create cluster2 network
resource "openstack_networking_network_v2" "cluster2" {
  name           = "cluster2"
  admin_state_up = true
}

# Create cluster2 subnet
resource "openstack_networking_subnet_v2" "cluster2" {
  name       = "cluster2"
  network_id = openstack_networking_network_v2.cluster2.id
  # Private (RFC 1918) range: 11.0.0.0/8 is public address space
  cidr            = "10.0.1.0/24"
  ip_version      = 4
  dns_nameservers = ["83.166.143.51", "83.166.143.52"]
}

# Create cluster2 router, with its gateway on the external network
resource "openstack_networking_router_v2" "cluster2" {
  name                = "cluster2"
  admin_state_up      = true
  external_network_id = data.openstack_networking_network_v2.cluster2.id
}

# Plug the subnet into the router
resource "openstack_networking_router_interface_v2" "cluster2" {
  router_id = openstack_networking_router_v2.cluster2.id
  subnet_id = openstack_networking_subnet_v2.cluster2.id
}
```
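`networks.tf` repeats the same four resources for each cluster. As an alternative layout, and not something the rest of this tutorial relies on, the network layer could be generated from a map with `for_each`. In this sketch, the variable `cluster_cidrs` and the resource names `public` and `cluster` are hypothetical. If you adopt it, it replaces the whole of `networks.tf`, and references elsewhere must change accordingly: for example, `openstack_networking_network_v2.cluster1.id` becomes `openstack_networking_network_v2.cluster["cluster1"].id`.

```hcl
# Hypothetical map: cluster name => private subnet range
variable "cluster_cidrs" {
  type = map(string)
  default = {
    cluster1 = "10.0.0.0/24"
    cluster2 = "10.0.1.0/24"
  }
}

# A single lookup of the external network, shared by every router
data "openstack_networking_network_v2" "public" {
  name = var.public-net
}

# One network per map entry
resource "openstack_networking_network_v2" "cluster" {
  for_each       = var.cluster_cidrs
  name           = each.key
  admin_state_up = true
}

# One subnet per network, using the CIDR from the map
resource "openstack_networking_subnet_v2" "cluster" {
  for_each        = var.cluster_cidrs
  name            = each.key
  network_id      = openstack_networking_network_v2.cluster[each.key].id
  cidr            = each.value
  ip_version      = 4
  dns_nameservers = ["83.166.143.51", "83.166.143.52"]
}

# One router per cluster, with its gateway on the external network
resource "openstack_networking_router_v2" "cluster" {
  for_each            = var.cluster_cidrs
  name                = each.key
  admin_state_up      = true
  external_network_id = data.openstack_networking_network_v2.public.id
}

# Plug each subnet into its router
resource "openstack_networking_router_interface_v2" "cluster" {
  for_each  = var.cluster_cidrs
  router_id = openstack_networking_router_v2.cluster[each.key].id
  subnet_id = openstack_networking_subnet_v2.cluster[each.key].id
}
```

With this layout, adding a network for a third cluster is a single new map entry. The instances, security groups and load balancers would still need their own changes.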

Then, create some security groups:

**sec_groups.tf**

```hcl
##############################################
# Create security group for cluster1 instances
##############################################
resource "openstack_networking_secgroup_v2" "cluster1_sec_group" {
  name        = "cluster1"
  description = "Security group for the cluster1 instances"
}

# Allow SSH
resource "openstack_networking_secgroup_rule_v2" "ssh-cluster1" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "tcp"
  port_range_min    = 22
  port_range_max    = 22
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster1_sec_group.id
}

# Allow HTTP
resource "openstack_networking_secgroup_rule_v2" "http-cluster1" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "tcp"
  port_range_min    = 80
  port_range_max    = 80
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster1_sec_group.id
}

# Allow ICMP
resource "openstack_networking_secgroup_rule_v2" "icmp-cluster1" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "icmp"
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster1_sec_group.id
}

##############################################
# Create security group for cluster2 instances
##############################################
resource "openstack_networking_secgroup_v2" "cluster2_sec_group" {
  name        = "cluster2"
  description = "Security group for the cluster2 instances"
}

# Allow SSH
resource "openstack_networking_secgroup_rule_v2" "ssh-cluster2" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "tcp"
  port_range_min    = 22
  port_range_max    = 22
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster2_sec_group.id
}

# Allow HTTP
resource "openstack_networking_secgroup_rule_v2" "http-cluster2" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "tcp"
  port_range_min    = 80
  port_range_max    = 80
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster2_sec_group.id
}

# Allow ICMP
resource "openstack_networking_secgroup_rule_v2" "icmp-cluster2" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "icmp"
  remote_ip_prefix  = "0.0.0.0/0"
  security_group_id = openstack_networking_secgroup_v2.cluster2_sec_group.id
}
```
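The rules above accept SSH from any address (`0.0.0.0/0`). If you prefer to limit SSH to a known source range, one possible approach is a variable for the allowed CIDR. In this sketch, `ssh_allowed_cidr` is a hypothetical variable name. The rule shown would replace the existing `ssh-cluster1` rule, and you would change `ssh-cluster2` the same way.

```hcl
# Hypothetical variable: source range allowed to reach port 22.
# It has no default, so Terraform asks for it (or reads it from a .tfvars file).
variable "ssh_allowed_cidr" {
  type        = string
  description = "IPv4 CIDR allowed to connect over SSH, e.g. 203.0.113.10/32"

  validation {
    # cidrnetmask() fails on anything that is not an IPv4 CIDR block
    condition     = can(cidrnetmask(var.ssh_allowed_cidr))
    error_message = "ssh_allowed_cidr must be an IPv4 CIDR block such as 203.0.113.10/32."
  }
}

# Allow SSH only from the configured range
resource "openstack_networking_secgroup_rule_v2" "ssh-cluster1" {
  direction         = "ingress"
  ethertype         = "IPv4"
  protocol          = "tcp"
  port_range_min    = 22
  port_range_max    = 22
  remote_ip_prefix  = var.ssh_allowed_cidr
  security_group_id = openstack_networking_secgroup_v2.cluster1_sec_group.id
}
```

Leaving the variable without a default means an empty or mistyped range is caught at plan time instead of silently opening or closing port 22.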

Now, we are ready to configure our instances:

**instances.tf**

```hcl
############################
# Create cluster 1 instances
############################

# Create cluster 1 anti-affinity group (spread VMs across hypervisors when possible)
resource "openstack_compute_servergroup_v2" "sg-anti-affinity-1" {
  name     = "sg-anti-affinity-1"
  policies = ["soft-anti-affinity"]
}

# Create instances
resource "openstack_compute_instance_v2" "cluster1" {
  count           = var.number_of_instances
  name            = "cluster1-${count.index}"
  flavor_name     = var.cluster1_flavor_name
  image_name      = var.image_name
  key_pair        = openstack_compute_keypair_v2.ssh-key.name
  security_groups = [openstack_networking_secgroup_v2.cluster1_sec_group.name]

  network {
    uuid = openstack_networking_network_v2.cluster1.id
  }

  # Wait for the subnet and its router interface, so that the boot
  # script can reach the Debian mirrors when installing nginx
  depends_on = [
    openstack_networking_subnet_v2.cluster1,
    openstack_networking_router_interface_v2.cluster1,
  ]

  scheduler_hints {
    group = openstack_compute_servergroup_v2.sg-anti-affinity-1.id
  }

  # Install nginx at first boot and start it on every boot
  user_data = <<-EOF
    #!/bin/bash
    sudo apt update -y
    sudo apt install nginx -y
    sudo systemctl enable nginx
    sudo systemctl start nginx
  EOF
}

############################
# Create cluster 2 instances
############################

# Create cluster 2 anti-affinity group (spread VMs across hypervisors when possible)
resource "openstack_compute_servergroup_v2" "sg-anti-affinity-2" {
  name     = "sg-anti-affinity-2"
  policies = ["soft-anti-affinity"]
}

# Create instances
resource "openstack_compute_instance_v2" "cluster2" {
  count           = var.number_of_instances
  name            = "cluster2-${count.index}"
  flavor_name     = var.cluster2_flavor_name
  image_name      = var.image_name
  key_pair        = openstack_compute_keypair_v2.ssh-key.name
  security_groups = [openstack_networking_secgroup_v2.cluster2_sec_group.name]

  network {
    uuid = openstack_networking_network_v2.cluster2.id
  }

  # Depend on cluster2's own subnet and router interface (not cluster1's)
  depends_on = [
    openstack_networking_subnet_v2.cluster2,
    openstack_networking_router_interface_v2.cluster2,
  ]

  # Use cluster2's own anti-affinity group
  scheduler_hints {
    group = openstack_compute_servergroup_v2.sg-anti-affinity-2.id
  }

  # Install nginx at first boot and start it on every boot
  user_data = <<-EOF
    #!/bin/bash
    sudo apt update -y
    sudo apt install nginx -y
    sudo systemctl enable nginx
    sudo systemctl start nginx
  EOF
}
```
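The instances only get private addresses, so it can help to list them after deployment, for example to compare them with the load balancer pool members. Here is a minimal sketch of an extra output; the output name `instances-private-ips` is our own choice.

```hcl
# Private IPv4 address of every instance, grouped by cluster
output "instances-private-ips" {
  description = "Private IP of each instance, per cluster"
  value = {
    cluster1 = openstack_compute_instance_v2.cluster1[*].access_ip_v4
    cluster2 = openstack_compute_instance_v2.cluster2[*].access_ip_v4
  }
}
```

The `[*]` splat expression collects the attribute from every instance created by `count`. Each cluster therefore yields a list of `var.number_of_instances` addresses.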

Finally, add the load balancers:

**loadbalancer.tf**

```hcl
##############################
# CLUSTER1 HTTP LOAD BALANCER
##############################

# Create load balancer
resource "openstack_lb_loadbalancer_v2" "cluster1" {
  name          = "cluster1"
  vip_subnet_id = openstack_networking_subnet_v2.cluster1.id
  depends_on    = [openstack_compute_instance_v2.cluster1]
}

# Attach a public floating IP to the load balancer's VIP port
resource "openstack_networking_floatingip_v2" "float_cluster1" {
  pool    = var.public-net
  port_id = openstack_lb_loadbalancer_v2.cluster1.vip_port_id
}

# Create listener
resource "openstack_lb_listener_v2" "cluster1" {
  name            = "listener_cluster1"
  protocol        = "HTTP"
  protocol_port   = 80
  loadbalancer_id = openstack_lb_loadbalancer_v2.cluster1.id
}

# Set load balancer mode to Round Robin between instances
resource "openstack_lb_pool_v2" "cluster1" {
  name        = "pool_cluster1"
  protocol    = "HTTP"
  lb_method   = "ROUND_ROBIN"
  listener_id = openstack_lb_listener_v2.cluster1.id
}

# Add cluster1 instances to pool
resource "openstack_lb_member_v2" "cluster1" {
  count         = var.number_of_instances
  address       = openstack_compute_instance_v2.cluster1[count.index].access_ip_v4
  name          = openstack_compute_instance_v2.cluster1[count.index].name
  protocol_port = 80
  pool_id       = openstack_lb_pool_v2.cluster1.id
  subnet_id     = openstack_networking_subnet_v2.cluster1.id
}

# Create health monitor checking that services are listening properly
resource "openstack_lb_monitor_v2" "cluster1" {
  name        = "monitor_cluster1"
  pool_id     = openstack_lb_pool_v2.cluster1.id
  type        = "HTTP"
  delay       = 2
  timeout     = 2
  max_retries = 3
  depends_on  = [openstack_lb_member_v2.cluster1]
}

##############################
# CLUSTER2 HTTP LOAD BALANCER
##############################

# Create load balancer
resource "openstack_lb_loadbalancer_v2" "cluster2" {
  name          = "cluster2"
  vip_subnet_id = openstack_networking_subnet_v2.cluster2.id
  depends_on    = [openstack_compute_instance_v2.cluster2]
}

# Attach a public floating IP to the load balancer's VIP port
resource "openstack_networking_floatingip_v2" "float_cluster2" {
  pool    = var.public-net
  port_id = openstack_lb_loadbalancer_v2.cluster2.vip_port_id
}

# Create listener
resource "openstack_lb_listener_v2" "cluster2" {
  name            = "listener_cluster2"
  protocol        = "HTTP"
  protocol_port   = 80
  loadbalancer_id = openstack_lb_loadbalancer_v2.cluster2.id
}

# Set load balancer mode to Round Robin between instances
resource "openstack_lb_pool_v2" "cluster2" {
  name        = "pool_cluster2"
  protocol    = "HTTP"
  lb_method   = "ROUND_ROBIN"
  listener_id = openstack_lb_listener_v2.cluster2.id
}

# Add cluster2 instances to pool
resource "openstack_lb_member_v2" "cluster2" {
  count         = var.number_of_instances
  address       = openstack_compute_instance_v2.cluster2[count.index].access_ip_v4
  name          = openstack_compute_instance_v2.cluster2[count.index].name
  protocol_port = 80
  pool_id       = openstack_lb_pool_v2.cluster2.id
  subnet_id     = openstack_networking_subnet_v2.cluster2.id
}

# Create health monitor checking that services are listening properly
resource "openstack_lb_monitor_v2" "cluster2" {
  name        = "monitor_cluster2"
  pool_id     = openstack_lb_pool_v2.cluster2.id
  type        = "HTTP"
  delay       = 2
  timeout     = 2
  max_retries = 3
  depends_on  = [openstack_lb_member_v2.cluster2]
}

output "loadbalancers-ips" {
  description = "Public IP of each load balancer"
  depends_on  = [openstack_networking_floatingip_v2.float_cluster1, openstack_networking_floatingip_v2.float_cluster2]
  value = {
    "Public IP cluster1" = openstack_networking_floatingip_v2.float_cluster1.address
    "Public IP cluster2" = openstack_networking_floatingip_v2.float_cluster2.address
  }
}
```
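The health monitors above rely on the default HTTP check. If you want that check to be explicit, `openstack_lb_monitor_v2` also accepts `http_method`, `url_path` and `expected_codes`. The sketch below would replace the `cluster1` monitor. Its values are assumptions that, as far as we understand, match Octavia's defaults (a `GET` on `/` expecting a `200`). Adjust them if your application exposes a dedicated health endpoint.

```hcl
# Health monitor with an explicit HTTP check
resource "openstack_lb_monitor_v2" "cluster1" {
  name        = "monitor_cluster1"
  pool_id     = openstack_lb_pool_v2.cluster1.id
  type        = "HTTP"
  delay       = 2 # seconds between two checks
  timeout     = 2 # seconds to wait for a response
  max_retries = 3

  # Assumed values: request "/" with GET and expect nginx to answer 200
  http_method    = "GET"
  url_path       = "/"
  expected_codes = "200"

  depends_on = [openstack_lb_member_v2.cluster1]
}
```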

Deploy to the public cloud

Now that the configuration is complete, check that it is valid with `terraform validate` (run `terraform init` first if you have not done so yet in this directory).

The command should print `Success! The configuration is valid.`

Finally, send the configuration to the public cloud with `terraform apply` (or `tofu apply` if you use OpenTofu, which produced the sample output below).

The command first previews the resource changes. When prompted, type `yes` to confirm the deployment.

```shell
Plan: 41 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  OpenTofu will perform the actions described above.
  Only 'yes' will be accepted to approve.
```

You should obtain a result like this:

```shell
Apply complete! Resources: 41 added, 0 changed, 0 destroyed.

Outputs:

loadbalancers-ips = {
  "Public IP cluster1" = "195.a.b.c"
  "Public IP cluster2" = "195.x.y.z"
}
```

Open each public IP in your web browser and check that it serves the nginx welcome page.

Success

You now have two clusters successfully deployed with Terraform on the Infomaniak Public Cloud.

In the next step, we will deploy one of our clusters in a different region.
